Ernie 4.5

This model was released on 2025-06-30 and added to Hugging Face Transformers on 2025-07-21.


Overview

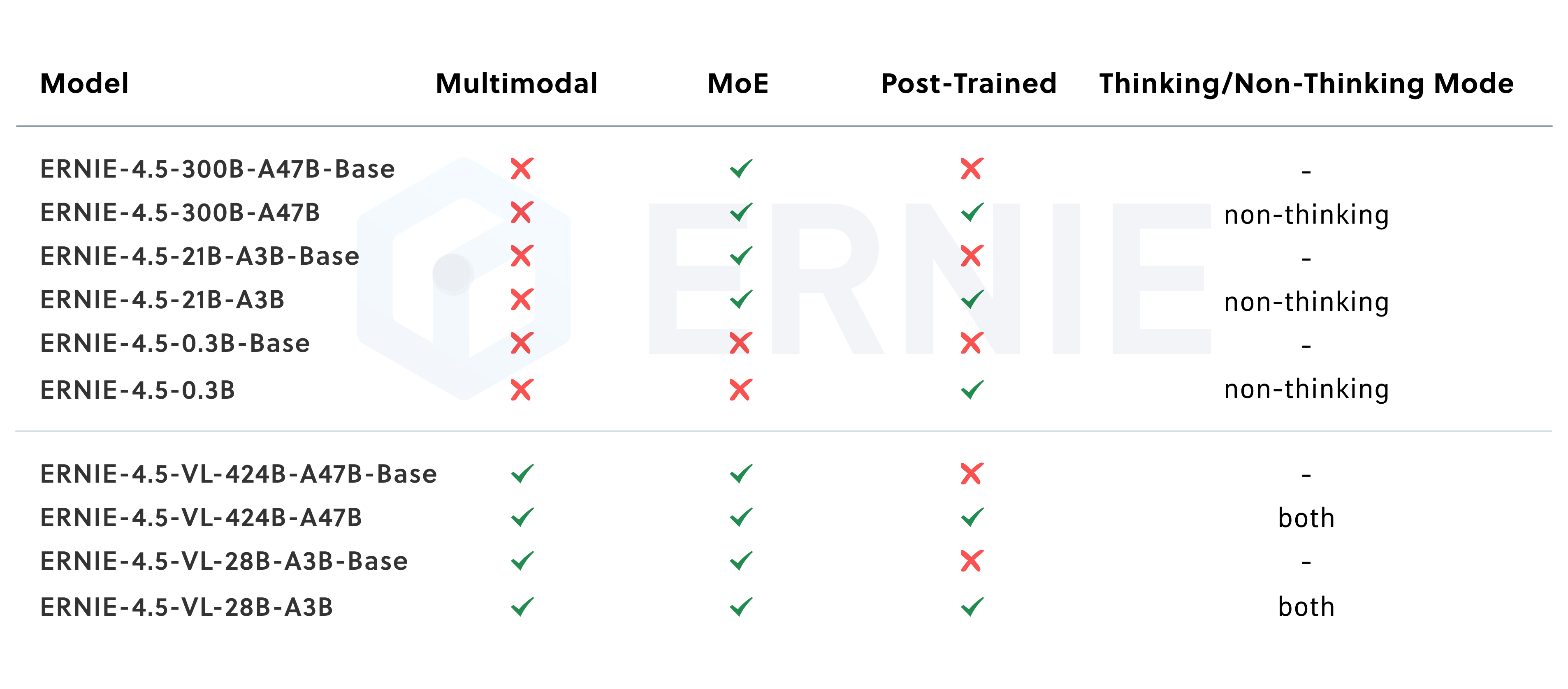

The Ernie 4.5 model was released by Baidu as part of the Ernie 4.5 Model Family. The family contains several architectures and model sizes. This page covers the base text model without mixture of experts (MoE), which has 0.3B parameters in total. At its core, it uses the standard Llama architecture.
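As a rough check on the 0.3B figure, the weights of a bias-free Llama-style decoder (token embedding, grouped-query attention, gated MLP with gate/up/down projections, RMSNorm) can be tallied as below. This is a minimal sketch: `llama_style_param_count` is a hypothetical helper, and the config values passed to it are assumptions that should be checked against the checkpoint's `config.json` (or `Ernie4_5Config`) before relying on the result.

```python
def llama_style_param_count(
    vocab_size: int,
    hidden_size: int,
    num_layers: int,
    num_heads: int,
    num_kv_heads: int,
    head_dim: int,
    intermediate_size: int,
    tie_word_embeddings: bool = True,
) -> int:
    """Count the weights of a bias-free Llama-style decoder (hypothetical helper)."""
    embed = vocab_size * hidden_size
    q_proj = hidden_size * num_heads * head_dim
    kv_proj = 2 * hidden_size * num_kv_heads * head_dim  # k and v projections (GQA)
    o_proj = num_heads * head_dim * hidden_size
    mlp = 3 * hidden_size * intermediate_size  # gate, up and down projections
    norms = 2 * hidden_size  # input and post-attention RMSNorm weights
    per_layer = q_proj + kv_proj + o_proj + mlp + norms
    final_norm = hidden_size
    # A tied LM head reuses the embedding matrix, so it adds no new weights.
    lm_head = 0 if tie_word_embeddings else vocab_size * hidden_size
    return embed + num_layers * per_layer + final_norm + lm_head


# ASSUMED values for the 0.3B checkpoint; verify against its config.json.
total = llama_style_param_count(
    vocab_size=103424, hidden_size=1024, num_layers=18, num_heads=16,
    num_kv_heads=2, head_dim=128, intermediate_size=3072,
)
print(f"{total / 1e9:.2f}B parameters")  # prints 0.36B parameters
```

Under these assumed values, roughly 0.1B of the total sits in the (tied) embedding matrix, which is typical for small models with large vocabularies.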

Other models from the family are documented under Ernie 4.5 MoE and Ernie 4.5 VL MoE.

Usage Tips

Generate text

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "baidu/ERNIE-4.5-0.3B-PT"

# load the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map="auto",
    dtype=torch.bfloat16,
)

# prepare the model input by applying the chat template to a user message
prompt = "Hey, are you conscious? Can you talk to me?"
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)
model_inputs = tokenizer([text], add_special_tokens=False, return_tensors="pt").to(model.device)

# conduct text completion
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=32,
)
# keep only the newly generated tokens by dropping the prompt
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

# decode the generated ids
generate_text = tokenizer.decode(output_ids, skip_special_tokens=True)
print(generate_text)

This model was contributed by Anton Vlasjuk. The original code can be found here.
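The generation example keeps only the new tokens by slicing each output sequence at the prompt length, because `generate` on a decoder-only model returns the prompt followed by the continuation. The pure-Python sketch below shows the same idea for a batch. It assumes the tokenizer pads on the left (`tokenizer.padding_side = "left"`), so every row's prompt occupies the same leading positions; the helper `strip_prompt` and the token ids are hypothetical.

```python
def strip_prompt(generated: list[list[int]], prompt_len: int) -> list[list[int]]:
    """Drop the first `prompt_len` tokens from every generated sequence.

    Hypothetical helper: with left padding, all prompts in a batch share the
    same padded length, so a single slice index works for every row.
    """
    return [row[prompt_len:] for row in generated]


# Made-up ids: two prompts left-padded to length 4 (0 stands in for the pad id),
# each followed by 3 generated tokens.
generated = [
    [0, 0, 11, 12, 101, 102, 103],
    [21, 22, 23, 24, 201, 202, 2],
]
print(strip_prompt(generated, prompt_len=4))
# [[101, 102, 103], [201, 202, 2]]
```

With PyTorch tensors, the equivalent is `generated_ids[:, model_inputs.input_ids.shape[1]:]`, which can be passed to `tokenizer.batch_decode(..., skip_special_tokens=True)`.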

Ernie4_5Config

[[autodoc]] Ernie4_5Config

Ernie4_5Model

[[autodoc]] Ernie4_5Model
    - forward

Ernie4_5ForCausalLM

[[autodoc]] Ernie4_5ForCausalLM
    - forward