SpQR

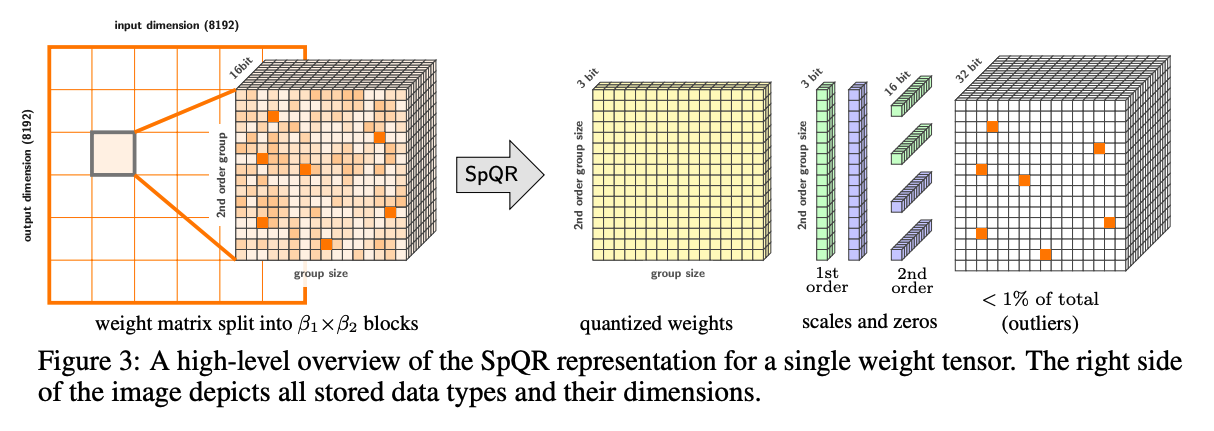

The SpQR quantization algorithm involves a 16x16 tiled bi-level group 3-bit quantization structure with sparse outliers.

Load a SpQR-quantized model with from_pretrained.

from transformers import AutoTokenizer, AutoModelForCausalLMimport torch

quantized_model = AutoModelForCausalLM.from_pretrained( "elvircrn/Llama-2-7b-SPQR-3Bit-16x16-red_pajama-hf", dtype=torch.half, device_map="auto")tokenizer = AutoTokenizer.from_pretrained("elvircrn/Llama-2-7b-SPQR-3Bit-16x16-red_pajama-hf")