InstructBlipVideo

This model was released on 2023-05-11 and added to Hugging Face Transformers on 2024-06-25.

InstructBlipVideo

Section titled “InstructBlipVideo”

Overview

Section titled “Overview”The InstructBLIPVideo is an extension of the models proposed in InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning by Wenliang Dai, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Junqi Zhao, Weisheng Wang, Boyang Li, Pascale Fung, Steven Hoi. InstructBLIPVideo uses the same architecture as InstructBLIP and works with the same checkpoints as InstructBLIP. The only difference is the ability to process videos.

The abstract from the paper is the following:

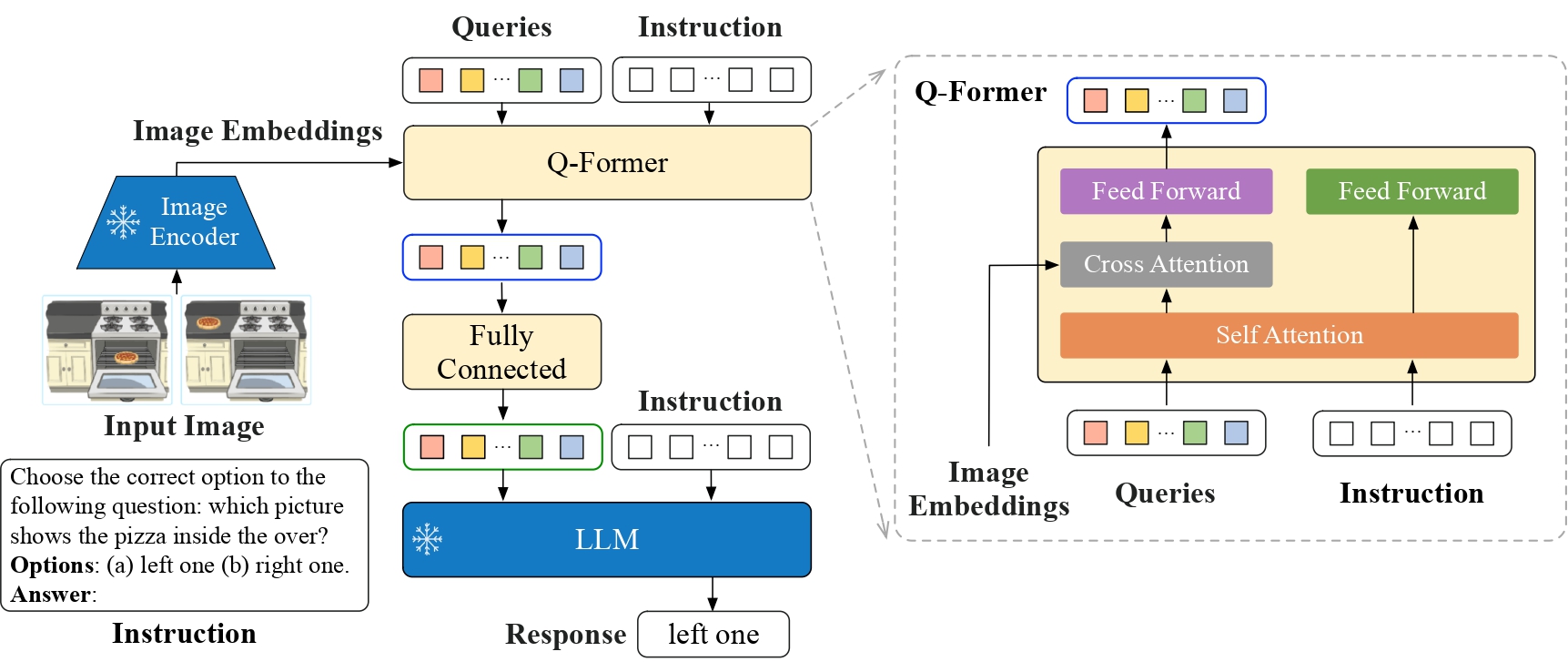

General-purpose language models that can solve various language-domain tasks have emerged driven by the pre-training and instruction-tuning pipeline. However, building general-purpose vision-language models is challenging due to the increased task discrepancy introduced by the additional visual input. Although vision-language pre-training has been widely studied, vision-language instruction tuning remains relatively less explored. In this paper, we conduct a systematic and comprehensive study on vision-language instruction tuning based on the pre-trained BLIP-2 models. We gather a wide variety of 26 publicly available datasets, transform them into instruction tuning format and categorize them into two clusters for held-in instruction tuning and held-out zero-shot evaluation. Additionally, we introduce instruction-aware visual feature extraction, a crucial method that enables the model to extract informative features tailored to the given instruction. The resulting InstructBLIP models achieve state-of-the-art zero-shot performance across all 13 held-out datasets, substantially outperforming BLIP-2 and the larger Flamingo. Our models also lead to state-of-the-art performance when finetuned on individual downstream tasks (e.g., 90.7% accuracy on ScienceQA IMG). Furthermore, we qualitatively demonstrate the advantages of InstructBLIP over concurrent multimodal models.

InstructBLIPVideo architecture. Taken from the original paper.

This model was contributed by RaushanTurganbay. The original code can be found here.

Usage tips

Section titled “Usage tips”- The model was trained by sampling 4 frames per video, so it’s recommended to sample 4 frames

The attributes can be obtained from model config, as model.config.num_query_tokens and model embeddings expansion can be done by following this link.

InstructBlipVideoConfig

Section titled “InstructBlipVideoConfig”[[autodoc]] InstructBlipVideoConfig

InstructBlipVideoVisionConfig

Section titled “InstructBlipVideoVisionConfig”[[autodoc]] InstructBlipVideoVisionConfig

InstructBlipVideoQFormerConfig

Section titled “InstructBlipVideoQFormerConfig”[[autodoc]] InstructBlipVideoQFormerConfig

InstructBlipVideoProcessor

Section titled “InstructBlipVideoProcessor”[[autodoc]] InstructBlipVideoProcessor

InstructBlipVideoVideoProcessor

Section titled “InstructBlipVideoVideoProcessor”[[autodoc]] InstructBlipVideoVideoProcessor - preprocess

InstructBlipVideoVisionModel

Section titled “InstructBlipVideoVisionModel”[[autodoc]] InstructBlipVideoVisionModel - forward

InstructBlipVideoQFormerModel

Section titled “InstructBlipVideoQFormerModel”[[autodoc]] InstructBlipVideoQFormerModel - forward

InstructBlipVideoModel

Section titled “InstructBlipVideoModel”[[autodoc]] InstructBlipVideoModel - forward

InstructBlipVideoForConditionalGeneration

Section titled “InstructBlipVideoForConditionalGeneration”[[autodoc]] InstructBlipVideoForConditionalGeneration - forward - generate